7 Golden Rules of Link Building for 2022 and beyond

Also available in German: 7 Goldene Regeln des Link-Building für 2022 und darüber hinaus

Table of Contents

7 Golden Rules of Link Building for 2022 and beyond #

- Link Relevance, Link Location, Useful Content

- Domain Trust

- Natural anchor text

- NoFollow links

- Links on Juicy pages

- No spam!

- Don’t buy links for PageRank

Prologue #

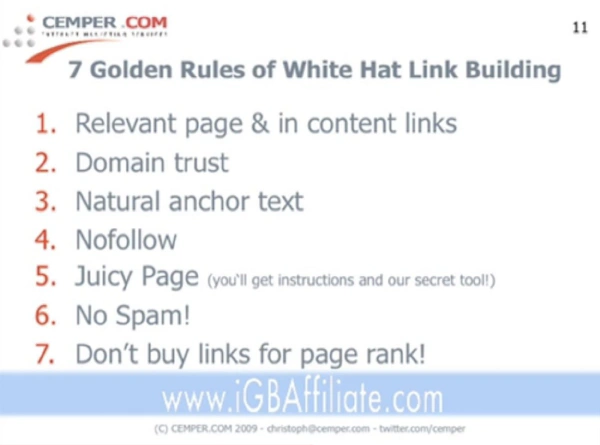

I introduced the world to my 7 Golden Rules of Link Building at A4UExpo in May 2009. You may think a lot has changed in SEO and Link Building since 2009, and it has. But my core values and concepts of link building have not changed.

I revisited and updated my original “Golden Rules” for 2022. I tried to incorporate more common current phrases, such as “traffic links” for “links on juicy pages”, and newer fancy words like “niche edits”. I think it’s important to highlight some key concepts here, because many SEO expert courses still teach them wrongly or incompletely, even in 2022.

Rules of Link Building have not changed much #

As I said in 2009, there’s too much wrong or outdated information on link building out there. The Golden Rules of Link Building can still serve as a quality checklist for every link builder today. No tools are required if you read and understand the concepts, although tools can help.

Since 2009 we’ve published parts of our own link building toolkit, previously internal only, and developed it a lot further. LinkResearchTools (LRT) is helpful but not required to follow these rules. In link building, I recommend using too few tools rather than too many. The easy availability of domain metrics has made the “number craziness” worse than Google PageRank ever did. Some agencies working under the label “Digital PR” are heading in the right direction by earning coverage, attention, and links, as even Google has confirmed. In my view, the best link building has always been what you would call Digital PR today.

I love some of the things I see from digital pr, it's a shame it often gets bucketed with the spammy kind of link building. It's just as critical as tech SEO, probably more so in many cases.

— John (@JohnMu) January 23, 2021

The chapter structure has not changed since 2009, which shows how evergreen these rules are. While some may think that link building and SEO change a lot, they don’t change that much.

The Impact of (wrong) Links has changed #

The most significant change in link building was the introduction of the Google Penguin algorithm, which changed the world of link building and SEO completely. Before Penguin, low-quality links could barely cause any harm unless they came in humongous quantities; in the post-Penguin world, SEOs need to attribute a potential risk to every link. The chapter “No spam! Low-Risk Links only” covers that aspect.

The Impact of NoFollow Links has changed (officially) #

The second significant change happened when Google finally confirmed that they would count NoFollow links too, if they feel like it. That came ten years after I first recommended building NoFollow links regardless. As you can tell, I’ve always built NoFollow links, and recommended building them, for a straightforward reason: they can matter. “Just look at Wikipedia” is what I usually say.

Told you so :-) #

With that, I could proudly say “I told you so” in this and many other regards of link building. Since 2003, I have woken up every day thinking only about links. I’m not sure there’s anyone more “link-minded” than me in the world, but if you are, I’d like to discuss links with you. Don’t get me wrong: there are many great link builders out there, but some of the best ones seem to stay below the radar these days, as they always have. Let me know.

Enjoy & learn,

Christoph C. Cemper

1. Link Relevance, Link Location, Useful Content #

Links need to be relevant - but how? #

Links need to be relevant. For me, that has been THE essential requirement in link building since 2005.

Old concepts, old shortcuts #

But what does the relevance of links even mean?

If you ask people to define link relevance, you will probably hear some pretty old rules: simple rules similar to what I’ve taught since 2005, like the following.

Simple link relevance rules care about:

- Relevance on domain level (e.g., blog.golfing.com)

- Relevance on page level (e.g., blog.golfing.com/golf/the-best-golf-clubs)

- Relevance on keyword (“best golf clubs”)

And sometimes you hear about additional scopes of relevancy, like

- Relevance on sentence level (“Here are the best golf clubs for beginners.”)

- Relevance on paragraph level (a couple of sentences around the sentence above, talking about golf, obviously)

- Relevance on folder level (/golf/)

- Relevance on root domain level (e.g., golfing.com)

Sometimes you hear that there’s a pyramid of importance, similar to Maslow’s pyramid of needs, with anchor text at the top as the most important element.

I’ve even seen a Maslow pyramid turned upside down, with anchor text as the foundation (the big flat plateau at the bottom), when it belongs at the top. First, you need to get relevance right on the other levels. Otherwise, all the “click here” links would be worthless.

I’ve been there, done that, got the T-Shirt.

All these concepts are simplifications, shortcuts to the essential reason.

Simple checklists to make standard operating procedures (SOPs) easier to write and understand.

SOPs for low-wage link building staff in developing countries, whose work some link building agencies mark up by a couple of thousand percent for profit.

However, there’s a lot more when it comes to the relevancy of links.

Natural language processing #

In 2022 and beyond, the simple approach of stuffing your money keywords into all scopes of the link graph is just wrong. It might work in the short term, but we still see ranking drops and Google penalties every week.

I simplified this significantly in 2009, but in the next section I explain the Google Smell Test, which shows how to do it right and naturally.

You have to accept that Google gets better every year at understanding natural language and context, in many ways you would not expect.

We’re in a technological age where you can convert a random photo into a music video of the person singing. There’s a lot more to link analysis and evaluation of links than just looking at a bunch of keywords on all scopes of the page.

Google is not a bunch of PHP scripts that look for keyword mentions on various levels of the page and domain; it never was. So teaching such a “hierarchy of relevance” has not been enough for a long time.

Besides, how many golf websites are out there that naturally recommend golf clubs for beginners? That type of content is random affiliate content, or filler for any publisher incentivized by impressions and ad revenue (like Forbes, Business Insider, and the like). You will find them all if you search for links with traffic in a natural way, instead of just going through a database of WordPress sites on expired domains that sell links.

Here’s a great example from Ammon Johns, who was asked:

What does “relevancy” of links mean to you? #

> Good question. To me, a link is relevant if it seems natural in the context of the text. Not a forced link and not irrelevant to the reader (even if it is a little bit of an aside to the main thrust of the content/topic).
>
> For example, if in this comment I were to talk about how a book I always recommend to people regarding earning useful links is ‘Purple Cow’ by Seth Godin, and I made that a link to the book on Good Reads or Amazon, then despite that book not being directly about links, or relevancy, or even SEO, it is still a relevant link, and a good one.
>
> However, if I were to tell you that while writing this comment, I was drinking my coffee that I made in my favorite percolator that I bought from Amazon, and included a link, then that’s a lot less relevant, even if the previous link had gone to Amazon. It’s merely more of a stretch from the *reader’s* intent and interest, and so less relevant to their needs and interests.
>
> That second link still isn’t wholly irrelevant though, because within the specific context of an SEO group, little insights into the lives of a so-called ‘famous’ SEO can still be of interest to people, and we SEOs do generally have a high interest in good coffee from all those late night jobs where the inspiration has taken us, but we need the caffeine to keep going.
>
> Now, did you notice how the last paragraph above actually increased the relevancy of the hypothetical link to the percolator on Amazon?
>
> That’s relevancy.

There are many more examples by others, but I found this one most comprehensive in explaining the trickiness of relevance and why every link needs to have a story.

Relevant Links have a Story. What is yours? #

In my experience, a link can be considered relevant if it has a story. If a link has a story, it most probably also passes the “Google Smell Test”.

The Google Smell Test. #

What Ammon did above gave us a story for the link.

A good sniff-test of whether a link is relevant or spammy is whether you’d feel comfortable showing it to a Google spam engineer.

Imagine you are sitting in a bar, next to a Google spam engineer, and you show him your links.

What if the spam engineer showed you spam examples? Click here for some of them.

What do you think the Google spam engineer would say about:

- your 38 links* from golf websites talking about golf clubs for beginners?

- those other 62 links* from “hobby bloggers” who discover a new highly commercial topic every hour and write about it?

- the 89% of those links* on well-SEOed websites, with excellent stock images on high-value premium WordPress templates?

- most of the WordPress sites linking to you showing a sharp decline in their links over the past 2-3 years, with an LVT of -99%? (In LRT, we can spot such a sharp drop by a strongly negative Link Velocity Trends (LVT) metric, but you can also click through to the link development chart for every link in Ahrefs, Semrush, or other tools if you like; they all provide some kind of chart as an indicator.)

- the impression that your “strongest links” sit on a network of rebuilt expired domains, rejuvenated with updated content, lovely templates, and maybe even some interlinking within the same network?

The Google spam engineer would probably say something like this:

> Oh look, lots of websites that changed ownership in the past years and are now significantly narrowed down in both topics and general traffic patterns. Also interesting that while they published some more content related to your topic, they didn’t acquire lots of links to it.
>
> Why do you think they linked to you?

Can you answer that question?

Does each of your links have a story to tell?

To tell the story, you would of course need to go through each linking page and read its story. If the only connection between your website and theirs is a linked keyword (from a highly suspicious, maybe even hacked, niche edit) or a sentence or paragraph about your “best golf clubs for beginners”, then it’s really time to think about link devaluations, link buying filters, or penalties you may already have.

John Mueller

Gary Illyes

Matt Cutts, former head of web spam

Throughout the years, I’ve seen obvious link spammers get away with it for a while and “build their empire,” only to drop after their links got filtered out or they even received a penalty.

If you work hard enough on your link spam and become successful and big enough in the search results, Google will catch up with you.

Then you should have a story for every link… or Google will find and penalize you.

Click here to see 11 real examples of which pages were called spam by the Google spam team.

Link Location should be in content - but why? #

Links in the content are words within a page’s body text that are linked, as opposed to links in navigation, headers, or footers.

You’ve probably heard the advice that links need to be in-content, need to be inside an article, a paragraph talking about you and your business, with keyword-rich anchor text.

But WHY should the links be in-content?

After all these years, I’ve come to the conclusion that the recommendation to place links “in-content” is too simple: a shortcut, as outlined above.

It’s easy to put that “rule” in a blog post, a presentation, and an SOP for your link building team; I did the same. Great.

When we place links into the body content, the purpose is to make the link more likely to get clicked.

When you hear boilerplate advice that a link should not be

- in the header,

- in the sidebar,

- in the footer,

- behind images

…or other link locations, then that’s an over-simplification, too.

There are quite a few reasons why links in such locations could still get clicked, for example:

- A big green button enticing the user to check out an additional article or offer about the topic

- A very prominent call-out at the end of the article

- A big, fat, yellow-highlighted link in the site navigation

- A sponsor banner among other sponsor banners

Conversely, there are many reasons why users would NOT click links placed in the body content.

A link would not get clicked if:

- The link was embedded in totally boring gibberish “SEO content” that nobody wants to read anyway.

- The link was “hidden” by CSS, i.e., it was not recognizable as clickable (yes, SEOs did that, believing they were helping themselves).

- The anchor text is just a meaningless “here” or “click for more”. There’s nothing wrong with giving users an indication of what they will find; this also helps Google understand the connection between the two pages.

These are just some brief examples to emphasize that we’re trying to place links that matter to the user, the visitor, the human, because that’s what Google is trying to emulate as well.

All those genius minds working for Google have just one goal: build a machine that can understand the web like a human - and lots of “human data” is being fed to that machine.

If you read just one Google patent (I recommend reading many), read the Reasonable Surfer patent and its 2016 update that made it more reasonable. Bill Slawski has done a great job of reading and simplifying search patents for over a decade.

The Reasonable Surfer patent also helps you understand that the web is just content and links. Google’s goal is to understand how users navigate the web, and you can NOT do that without hyperlinks. That’s the whole point of the web: content, media, and hyperlinks.

So if you come across theories that Google does not use links, or is trying not to use them, think about Google’s Reasonable Surfer.

Think about how people navigate web pages. Most people get it, but some years ago a couple of well-made presentations by a good marketer confused half of Germany about the concept of content and links as the two parts that make up the web.

Click here to hear it from Google

(this is again a legendary event with Ammon Johns)

So there goes your **142nd link from a website about golf**, with all the types of golf clubs and rules on dozens of very similar pages written specifically about golf, and a link to your golf store embedded.

Which human needs or wants to read all that similar fluff about the best golf clubs for beginners? Google probably ignores 80% of these similar links entirely.

Remember to think about the story for these links. What’s the point of them being there? How do they add value?

Or are they just useless content polluting the web, like these 11 real spam examples?

Of course, you want a page talking about your industry, business, products, or topics relevant to you. Of course, you want golf players’ experiences to be told, with a link to your affiliate site offering just the right golf club for beginners. But wouldn’t a legitimate recommendation today point directly to the brand manufacturing that golf club, or at least to an Amazon results page?

What is the value that YOUR page contributes to the world?

Build links to useful content #

If the page you’re getting links to is useful, then you suddenly have a part of your story checked off!

YOUR page (where the link is pointing to) has to be useful, not just lovely.

Unnatural links point to useless filler content. #

Nobody in their right mind would recommend, and therefore link to, low-quality content without an incentive.

By looking at the target page’s usefulness, and therefore its quality, Google may flag potentially unnatural links. You can think of user experience metrics here, but your gut feeling will tell you anyway.

Outreach for poor content is ineffective. #

Your outreach won’t be successful for poor content.

If you reach out to potential link sources such as influencers, authors, news sites, or associations and present the “piece” you hope to get links for, don’t get your hopes too high if the page doesn’t have the right angle.

The web is full of poor content, and nobody’s going to spend a minute linking to yours if you don’t provide something extra.

Bad Rankings for lousy content. #

Getting links means getting traffic and rankings.

Google will benchmark your content not only on the links it gets, but also on the user behavior it tracks when people consume that content. If people don’t like your content, then Google doesn’t like your content.

The web is full of mediocre content, and there are only ten organic results on the first page.

If your content is not interesting to read, not engaging, not comprehensive, and therefore does not answer the users’ questions, you shouldn’t expect good rankings for it, or good results in link building.

Basic criteria of useful content #

The question of what makes content good, relevant, and useful is probably as old as the web, or as humanity itself, so this is only a basic approach.

Questions to ask for great content #

- Is there enough tension, a common thread through the article, that makes the user want to read it in full?

- Can the reader feel the writer’s love and passion for the topic, or was the writer cranking out filler content to meet a contractually agreed word count?

- Are the images supporting the text, or are they just random stock images added so “there are also images”?

- Can you imagine some readers re-reading the article, at least partially?

- Are the details given in the article accurate, trustworthy, well explained, and credited to their sources? This accuracy is essential for more technical topics (finance, SEO, marketing technology, link building) and a requirement in any scientific publication.

- Can this article be found somewhere else, in a more helpful version?

- Would you consider the article the best one on the web on this topic? If not, what is missing? Can you at least link to places that expand on the missing parts? Remember, the web is built on links; no article can or needs to contain all information, and linking out for detailed discussion and deeper explanations is what made the web successful in the first place.

- Can the user easily consume the content? Think about lists, call-outs, in-article navigation, spacing, layout, font sizes, and other elements you might rather associate with “UX” jobs.

Please note: we have 1000s (shall we say 37,937*?) more spam examples, and since 2012 we have used them to train Link Detox Genesis, as well as for link spam analysis like this.

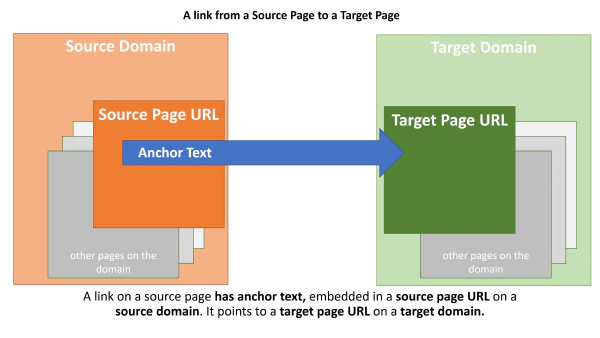

2. Domain Trust #

Domain trust concerns the trust of your domain and the trust of the domain linking to you.

Building trust with trusted links #

You need links from trusted sites to become trusted!

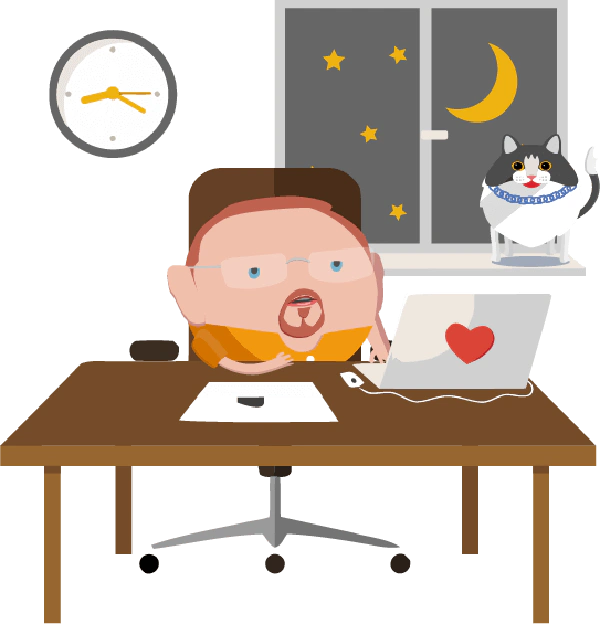

Both ends of a link are essential, especially when it comes to trust.

- the source domain (the domain linking FROM, where source page A sits)

- the target domain (the domain linked TO, where target page B lives)

The higher the trust of your target domain, the more you “get away” with it. #

Have you ever wondered why Amazon still performs so well despite having so many (cloaked) affiliate links from affiliate spam websites that have since been penalized?

Also, for years probably every sold link came with a “good co-citation”, a link to Wikipedia placed next to it, because link builders oversimplified that concept, too. As you probably expected, Wikipedia took no damage from it.

Measuring trust with proxy metrics #

In the past, domain trust was measured using proxy metrics such as

- domain age (old domains tend to have more trust if they have trusted links)

- brand names (real-life brands tend to have more trust if they have trusted links)

- co-citations (websites co-cited with other trusted websites tend to have higher trust)

- .edu links (university websites tend to have high trust, and links from them tend to pass varying amounts of trust)

All of these factors, and more, can affect the trust rank of a domain indirectly.

Measuring trust with the Trust Rank patent #

Since 2011, a metric has been available that implements the Trust Rank patent and is a lot more accurate than these old approximations.

With LRT Trust, we can measure trust on all link scopes (root domain, host/subdomain, and page level).

Trust measures a distance from a trusted seed set of websites, the most reputable parts of the web.
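To make the idea of “distance from a trusted seed set” more concrete, here is a minimal Python sketch. It is not LRT Trust and not an implementation of the Trust Rank patent; it simply assumes a hand-picked seed set, uses a breadth-first search to count how many link hops each site is from the nearest seed, and turns that into a toy score that halves with every hop. The link graph, the `.example` site names, the function names, and the decay factor of 0.5 are all hypothetical.

```python
from collections import deque


def seed_distances(links, seeds):
    """Return the minimum number of link hops from any seed site to each reachable site.

    links: dict mapping a site to the list of sites it links to (outgoing links).
    seeds: iterable of hand-picked trusted sites (distance 0).
    Sites that no seed can reach are absent from the result.
    """
    dist = {s: 0 for s in seeds}
    queue = deque(dist)
    while queue:
        site = queue.popleft()
        for target in links.get(site, []):
            if target not in dist:  # BFS visits sites in order of distance, so the first visit is the shortest path
                dist[target] = dist[site] + 1
                queue.append(target)
    return dist


def trust_scores(links, seeds, decay=0.5):
    """Hypothetical toy score: 1.0 for seeds, multiplied by `decay` per hop, 0.0 if unreachable."""
    dist = seed_distances(links, seeds)
    sites = set(links) | {t for targets in links.values() for t in targets}
    return {site: decay ** dist[site] if site in dist else 0.0 for site in sites}


# Hypothetical link graph: who links to whom.
links = {
    "university.example": ["golf-association.example"],
    "golf-association.example": ["your-golf-shop.example"],
    "pbn-site.example": ["your-golf-shop.example", "pbn-site-2.example"],
    "pbn-site-2.example": ["pbn-site.example"],
}

scores = trust_scores(links, seeds=["university.example"])
for site in sorted(scores):
    print(f"{site}: {scores[site]}")
```

In this toy model, the two PBN sites link to the shop but receive no trust themselves, because no seed reaches them, so their links add nothing; the shop’s score of 0.25 comes only from the two-hop path through the association. Real trust metrics are considerably more involved than a hop count.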

LRT Trust is a critical metric that helps decide whether a link should be built or disavowed, and it has an even greater influence on the more advanced LRT Risk metric.

Still, in 2022, many popular SEO tools sometimes described as competitors to LRT (like Ahrefs, Semrush, and Moz) do not have a metric that measures trust along the lines of the Trust Rank patent. Some use proxy metrics like referring domains or organic traffic to gauge authority, but they don’t take into account the quality of that traffic, for example. The only comparable metric we know of is Majestic Trust Flow.

Many link sellers use simplified domain-based metrics like Ahrefs DR or Moz DA, and clients sometimes get scammed by link sellers because of that. However, link buyers sometimes ask specifically for those insufficient, simplistic metrics only, which raises the question: who is ultimately responsible for educating link buyers?

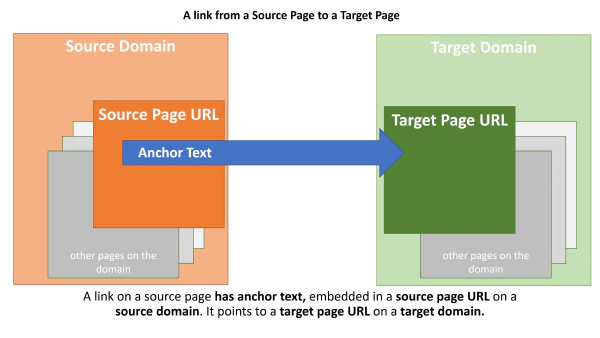

Building trust with good co-citations #

Trustworthy co-citations have always been, and still are, of great importance, but they are rarely talked about. I did so in this post and in many others before.

A more familiar term in link building is **“bad neighborhood”**.

Bad neighborhood, however, is sometimes simplified as “spammy websites on the same web host”, but that shouldn’t be much of your concern, not in 2009 and especially not in 2022.

It is simply a particular case of co-citation: you being co-cited with spammy or even toxic websites. That can happen when you go on a buying spree in paid link networks, PBNs, or other artificial link patterns.

When Link Detox introduced just a few basic co-citation and network rules in 2012, it already found so many problematic and outright toxic links causing penalties that we were surprised Google hadn’t acted on the (spammy) state of link building before 2012.

Example for bad neighborhood #

Even if you are not directly linking to spammy sites, if you are on pages linked together with spammy websites or other link buyers, you already have a problem.

This is true at the page level, as in the simple sunglasses paid link example from the Linux site I’ve been using since 2005 to explain bad neighborhoods, but also at the domain level. So if your links are in posts on domains where a lot of other link buyers have their links, you may be in trouble.

Who else has links where you have? #

Be careful who is linked WITH you - now and also in the future.

What trust rank means is that link quality is relative: if your domain is trusted, it will respond differently and more quickly to links.

You should therefore try to build TRUST and not only LINKS!

However, that doesn’t work by slapping a Wikipedia link into each of your 300-word “High-Quality LSI Posts”.

3. Use Natural anchor text. #

Natural anchor text distribution has been essential since 2009, and it still is.

In the Google Penguin penalties, we saw so many over-optimized link profiles, with all the links using the money keywords; it was a mess. Still, today, a lot of problems arise from overdoing anchor texts and using the same keyword over and over again.

Ideally, and most naturally, every anchor text appears only once. The only exception would be your brand name and its variations, including your domain name.

Don’t obsess about anchor text, or your links will not look natural!

Obsessing about commercial anchor text was wrong in 2009 and still is now.

Abstract example of natural anchor text #

One abstract example of a natural anchor text distribution could look like this:

| Anchor text | Share of links |
|---|---|
| [brand name] | 27.0% |
| [brand name plus some stuff] | 19.0% |
| [keyword and brand name plus some stuff] | 11.0% |
| [domain name] | 7.0% |
| [weird keyword and some stuff] | 1.3% |
| [weird keyword2 and some stuff] | 1.2% |
| [weird plus money keyword and some stuff] | 1.1% |
| [money keyword] | 0.1% |
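If you want to compare your own profile with a distribution like this, a minimal Python sketch could count how often each anchor text occurs in a backlink export and report its share in percent. It also lists non-brand anchors that occur more than once, following the rule that ideally only your brand name and domain name repeat. The anchor list, the brand “Acme Golf”, the domain `acmegolf.example`, and both function names are hypothetical; in practice you would load the anchors from whatever export your backlink tool provides.

```python
from collections import Counter


def anchor_distribution(anchors):
    """Return (anchor, share in percent) pairs, most frequent first.

    Anchors are normalized by stripping whitespace and lowercasing,
    so "Acme Golf" and "acme golf " count as the same anchor text.
    """
    counts = Counter(a.strip().lower() for a in anchors)
    total = sum(counts.values())
    return [(anchor, round(100 * n / total, 1)) for anchor, n in counts.most_common()]


def repeated_non_brand(anchors, brand_terms):
    """List anchors that occur more than once and contain none of the brand terms."""
    counts = Counter(a.strip().lower() for a in anchors)
    return sorted(
        anchor
        for anchor, n in counts.items()
        if n > 1 and not any(term in anchor for term in brand_terms)
    )


# Hypothetical anchors from a backlink export.
anchors = [
    "Acme Golf", "acme golf", "Acme Golf shop", "acmegolf.example",
    "best golf clubs for beginners", "best golf clubs for beginners",
    "this guide", "here", "Acme Golf", "https://acmegolf.example/",
]

for anchor, share in anchor_distribution(anchors):
    print(f"{share:5.1f}%  {anchor}")

print(repeated_non_brand(anchors, brand_terms=["acme golf", "acmegolf"]))
```

Here the repeated money phrase “best golf clubs for beginners” is the anchor that gets flagged. This only looks at anchor strings; the context of the linking and linked pages matters at least as much, so treat the output as a first rough check.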

The context of the linking page and the linked page, and the content of both, are essential, of course, today more than ever.

Don’t be fooled into thinking that you only need “the right anchor text”; those times are over. But anchor text remains a crucial element in link building, and if you can influence it on low-risk websites, then do that.

We’ve seen overly cautious SEO teams build their links only with the brand name and ZERO links with the URL as anchor text. Well, that’s not enough in some industries. Having relevant keywords in your link profile is not only natural but also required for good rankings.

The rules are different for every country, language, industry, topic, sub-topic, and keyword group.

Make sure you do your competitive research to learn the rules for your country, language, industry, topic, sub-topic, and keyword group. Many people believe there’s a one-size-fits-all SEO ruleset, but that belief is wrong.

Learn about keyword classes like money keywords, brand keywords, and compound keywords.

Real example of natural anchor text #

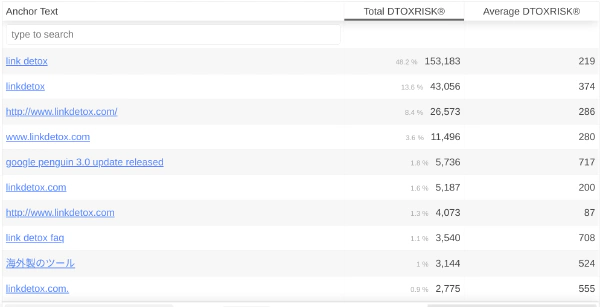

You see a very similar distribution in the link profile of LinkDetox.com - the domain we established for the trademark Link Detox® in 2012. Can you spot the money anchor text phrase?

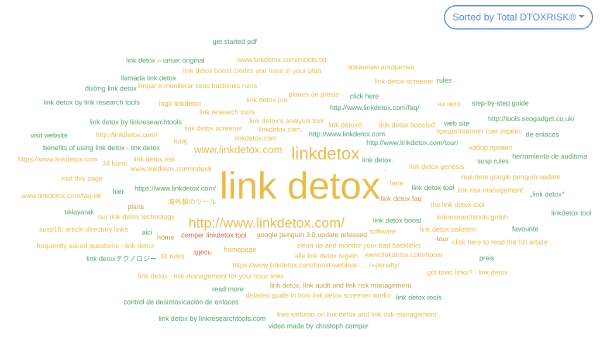

Natural link profile as a cloud #

Here is a keyword cloud that gives you a great idea of the risk of those links - only a few red ones are problematic.

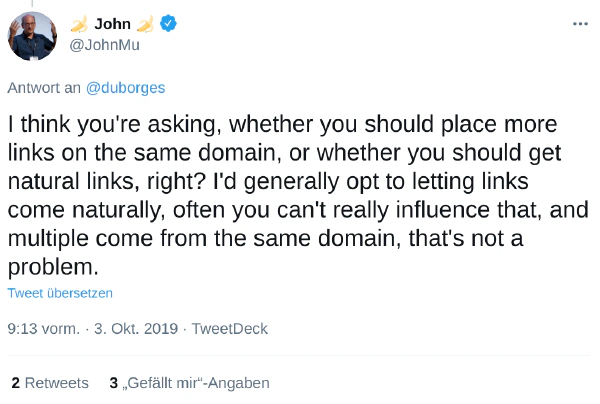

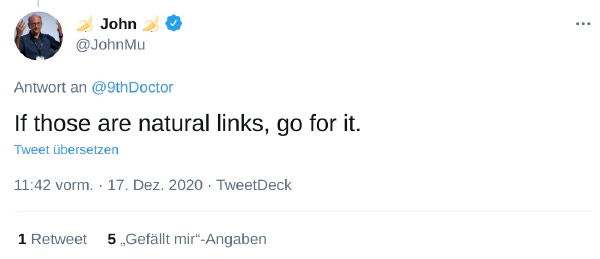

What is a natural link anyways? #

Matt Cutts, former head of web spam

Gary Illyes

John Mueller

A natural link looks like it was built by a user, not an SEO. Regular users don’t care about anchor texts, so if all your links look like they were made by an SEO, you are in trouble!

This means you should have not only high-value money keywords in your links, but also some links saying “cool site,” “brand name x,” “click here,” or even a longer phrase.

Don’t over-optimize!

Matt Cutts, the former head of web spam, once said that…

…the objective is not to make your links appear natural; the objective is that your links are natural.

And John Mueller and Gary Illyes have confirmed this in one way or another.

4. Build NoFollow links. #

Include some NoFollow links in your link profile. NoFollow links are natural.

If you build links, do you build NoFollow links on relevant pages as well?

Of course, you do, we hope.

We have recommended building, analyzing, auditing, and disavowing (spammy) NoFollow links since 2009, and have done that for our clients since 2005. Yes, you read that right: disavowing toxic NoFollow links also had a helpful effect.

Why? Because regular users don’t care about NoFollow attributes. And you should not care about NoFollow either, at the latest since 2019.

If you ask the everyday guy what a NoFollow link is, he will have no idea what that is and won’t care - because he doesn’t have to.

Don’t be a link builder, be the everyday person building your links.

Your links should look like they have been built by normal users, and so you should also include NoFollow links.

In 2014, we got confirmation that spammy NoFollow links can cause Google Penalties.

In 2019, we got official confirmation from Google that they would count NoFollow links.

There you go; we knew it all along.

I told you so over 11 years ago :)

5. Links on Juicy pages - Links with Traffic. #

Since 2009, I have told people to get juicy links.

https://www.youtube.com/watch?v=3nAbvbKBwdM

A juicy page ranks for various keyword phrases and passes that context on to you if you have a link on it, even regardless of the anchor text, though it works better with targeted anchor text. A webpage that ranks for a phrase or keyword vital to you is a page you want a link on, regardless of PageRank or any vanity metrics from SEO tools.

Some people also call that “Links with Traffic”.

Back in our time as a link building agency (we closed it in 2012 to avoid conflicts of interest), we built a unique, very thorough “CEMPER Juice Metric” that could measure the juiciness of a page independently of any artificial keyword or visibility index.

These days, people refer to “links on juicy pages” more as “links with traffic”, which is funny, because that term still does not imply that those links send any traffic to the target.

A golden link gets you “referral traffic from links with (organic) traffic”.

The video “Links with Traffic” goes over the definition and how you can find links with traffic, for free or with paid tools like Ahrefs, Semrush, Sistrix, and LRT.

Be aware that most people use “link juice” to mean PageRank (or link power) being passed on or not, rather than how much ranking power that page really has.

I am not sure who coined the term “link juice” long ago, but it may have to do with the mention of the juiciness of pages.

6. No spam! Low-Risk Links only. #

Watch your link neighborhood. Don’t build links in a bad neighborhood.

The advice to build links in a good neighborhood, free from spammy neighbors, has not changed since 2009, but the tools have changed a lot.

You don’t want to

- have links on duplicate content pages like article directories and article sites.

- add links to pages that are years old without changing their content (called “niche edits” in 2022; they are highly risky and sometimes hacked into websites).

- have your links in a bad neighborhood.

- have your links on websites known for selling links.

- have your links on websites that experienced a Google Penalty.

- have your links on hacked websites or websites that are spreading malware.

After all these years, we have a simple metric to cover this topic, and it’s called LRT Risk.

You can use the Link Simulator to estimate that.

I am not aware of any other software besides LRT and LRT Risk that measures link risk for potential links, and neither are link professionals like Rick Lomas (when asked Feb 22, 2021).

7. Don’t buy links for PageRank (or DR or DA) #

Super old screenshot of the Google Toolbar with their PageRank display by Searchengineland.

Initially, people were buying PageRank. Yes, the times of Google Toolbar PageRank are long over - but the mindset is still out there.

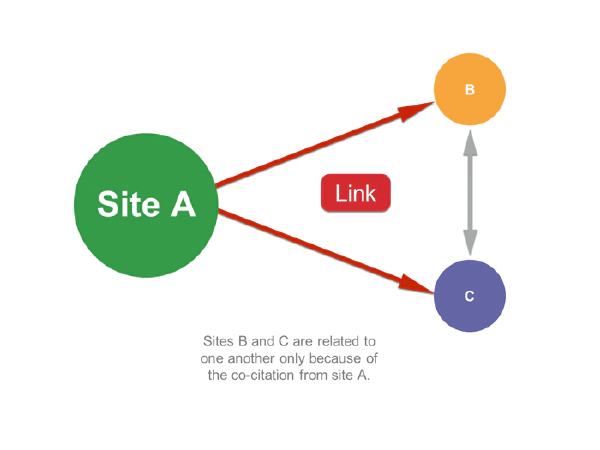

People now often evaluate links by domain-level metrics like Ahrefs DR or Moz DA, which is even worse than using Google PageRank.

It’s nonsense to use aggregated metrics that combine signals from all pages of a domain to judge a single page.

Depending on its internal and external links, that single page could be a useful page or a worthless orphan page that passes no link juice.

I advised against building links for PageRank alone back in 2009 and before. But at least PageRank was a page-level metric.

Do not rely on purely quantitative metrics like Ahrefs UR, Moz PA, or even LRT Power. They are just like the old PageRank I advised against in 2009. These metrics measure the number of links or referring domains, possibly on a logarithmic scale, and those links could number in the millions and still be ignored by search engines like Google.

Epilogue

John Mueller just confirmed that it’s not about the number of links but about their quality. This was true in 2009 and is still true today. And he did so in an orange T-shirt :)

SEO metrics that focus only on the number of referring links or referring domains are misleading.

The total number is entirely irrelevant, he said.

I agree, and would add that this is especially true when no other measurements, like traffic, risk, or trust, are involved.

In LRT, you can spot very weak links that have trust. Those are the links to keep. A link being weak based on its “link graph” and its “number of referring domains” says nothing about its power. Unfortunately, several SEO experts claim that weak links are bad and may even need to be disavowed. In our experience, disavowing is only necessary if those links have high risk and zero trust.

If you look at page-level metrics, we recommend using LRT Page Trust; it inherits Domain Trust if the page is well linked.

TL;DR: Actionable advice

People who don’t have the time or focus to read long posts always ask for “actionable advice”, so here’s a TL;DR.

1. Don’t use domain-based metrics like Ahrefs DR or Moz DA to qualify and price links. They are Sunshine SEO metrics.

2. Schedule some focused time to read and think about the Golden Rules of Link Building.

To your success!

Christoph C. Cemper

I wake up every day thinking about links.

PS: You can get the spam examples that Google’s spam engineers showed us for free with the CTA below.

Special Thanks

Some people engaged with me more than most and helped make this post even better:

- Jake Sacino, who writes on https://webpop.io and works at the agency Ranked.net.au, for fantastic, comprehensive feedback on the whole post, the funny bum sentence that made me laugh as a lead-out, and so many other great things that improved this post.

- Ammon Johns, for ultra-useful and dense explanations on the web and on Facebook in general, and for his constructive example of relevancy (which made it into this post; I had so many more that I could only screenshot).

- Lyndon NA (Darth Autocrat), for feedback, ideas, and ultra-comprehensive Twitter threads in answer to so many thoughts I post there. There’s a good chance he influenced me in many ways, like getting my stuff together and actually redoing this post (again).

- Jim Boykin of Internet Marketing Ninjas, one of the real legends in link building. Thanks for so much, starting with a day in Yosemite in 2005 when I learned so much from him in a single day.

Appendix

Prehistoric posts and videos on the rules of link building

- The original Golden Rules of Link Building blog post from 2009 in Webarchive,

- the related original slides on Slideshare from May 2009,

- the original chapter structure, and

- the recording of my talk “Advanced Link Building 2009” in Part One, Two, Three.

SEO Poll: Relevance of links

Polls on Twitter and Facebook produced various opinions on, and definitions of, the relevance of links.

https://twitter.com/cemper/status/1363071290068893698

Footnotes

* When you see exact-looking numbers, that’s sometimes a “copywriting trick” from the direct-response marketing world of the 1960s. It lures people into assuming very high precision and trustworthiness. The opposite is often the case, as you can tell from subject lines such as “Here’s how I made $123,544.52 with only 1 hour 30 work”, which are probably totally made-up numbers but have boosted conversions for decades. Here are some good posts from Copyblogger or Persuasion at work on the topic.

Post status

Note: you are reading a draft version of this article with only 6774 words; more will be added.

Still TODO:

- adding more examples

- adding more links

- review

- proofreading

- layout

- translation